OUR PATENTED SYSTEM

IMMERSIVE VIBES bridges two worlds - giving AI the ability to perform not as a machine, but as an empathic creative entity.

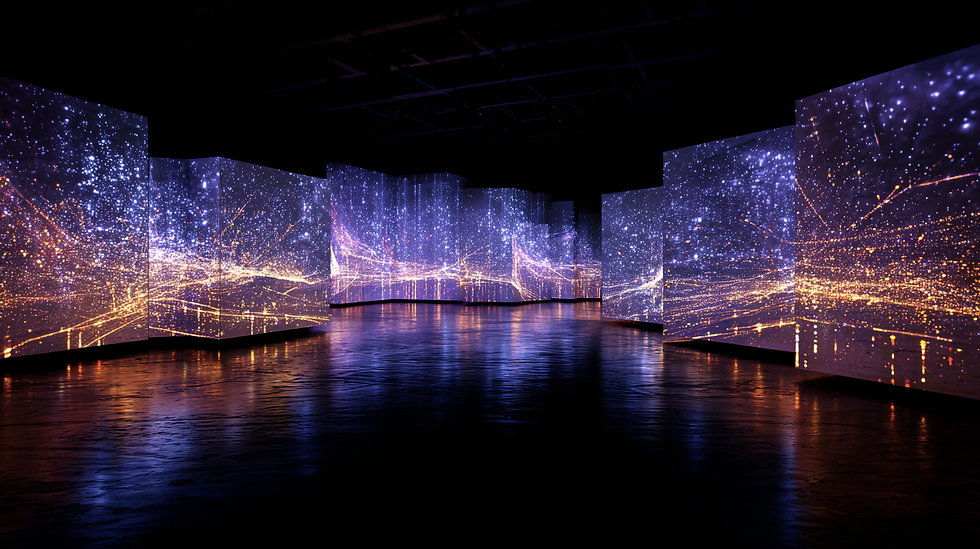

Our proprietary technology integrates AI, AR, and haptic systems to create a fully immersive, real-time sensory experience.

The platform enables digital and human artists to interact with audiences in ways never before possible.

KEY COMPONENTS

AI Engine - Analyzes biometric data such as heart rate, motion, and emotion to adapt sound, light, and haptic feedback live.

AR Visual System - Generates spatially aware visuals that respond to rhythm, intensity, and energy.

Haptic Feedback Layer - Delivers tactile sensations through vests, suits, or wristbands synchronized with sound and visuals.

Emotion Recognition System - Reads audience reactions through camera-based analytics and dynamically adjusts performance flow.

Metaverse Integration - Enables fully synchronized virtual shows with NFT ticketing and blockchain-based engagement.

HOW IT WORKS

1. AI Emotional Engine

A proprietary model interprets biometric and behavioral data in real time - reading heart rate, movement, audience energy, and emotional response to dynamically adjust visuals, music, and tactile sensations.
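As a rough illustration only, the kind of mapping described above could be sketched as follows. This is not the proprietary model: the names `AudienceSample`, `ShowSettings`, and `adapt`, the equal weighting of the three signals, and the heart-rate and tempo ranges are all assumptions made for the example.

```python
from dataclasses import dataclass


def clamp01(x: float) -> float:
    """Limit a value to the range 0..1."""
    return max(0.0, min(1.0, x))


@dataclass
class AudienceSample:
    heart_rate_bpm: float  # averaged heart rate from wearables (assumed input)
    motion: float          # 0..1 movement level (assumed scale)
    emotion: float         # 0..1 score from a hypothetical emotion classifier


@dataclass
class ShowSettings:
    tempo_bpm: float
    light_intensity: float   # 0..1
    haptic_intensity: float  # 0..1


def adapt(sample: AudienceSample,
          rest_hr: float = 60.0,
          peak_hr: float = 160.0) -> ShowSettings:
    """Map one audience sample to show settings (illustrative weights)."""
    # Normalize heart rate into 0..1 between assumed resting and peak values.
    hr_level = clamp01((sample.heart_rate_bpm - rest_hr) / (peak_hr - rest_hr))
    # Combine the three signals into a single energy score, equally weighted.
    energy = (hr_level + clamp01(sample.motion) + clamp01(sample.emotion)) / 3.0
    return ShowSettings(
        tempo_bpm=90.0 + 50.0 * energy,       # assumed range: 90 to 140 BPM
        light_intensity=energy,
        haptic_intensity=0.2 + 0.8 * energy,  # keep a small baseline sensation
    )
```

Calling `adapt(AudienceSample(heart_rate_bpm=110, motion=0.5, emotion=0.5))` yields a mid-range energy score, so tempo, light, and haptic intensity all land near the middle of their assumed ranges.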

2. Haptic Feedback Architecture

Using advanced haptic systems from Woojer and bHaptics, we deliver both collective (venue-wide) and personal (wearable) tactile experiences - allowing every spectator to feel the rhythm and intensity of sound.

3. AI Visual & AR Integration

Powered by NVIDIA Omniverse, Unreal Engine 5, and AI Vision Agents, our visuals adapt in real time to the artist’s energy and the crowd’s emotion - blending physical stage design with real-time generative 3D worlds.

4. Cross-Platform Connectivity

The technology bridges physical venues, metaverse environments, and streaming platforms - turning every show into an interconnected, data-driven, sensory network.

WHY IT'S REVOLUTIONARY

IMMERSIVE VIBES isn’t just another entertainment technology - it’s a new language of human-AI interaction.

By merging real-time AI, sensory hardware, and human emotion, we are creating a future where technology doesn’t separate people - it connects them more deeply.

Our model is scalable across industries - from music and entertainment to wellness, sports, education, and space exploration - redefining how humans experience the world through technology.

OUR UNIQUE AI MODEL

The Immersive Vibes AI model is not a single algorithm - it’s a living creative intelligence.

Trained on multimodal datasets that include sound frequencies, emotional cues, audience reactions, and motion capture data, it learns to create experiences rather than repeat them.

The result is a truly adaptive system in which every spectator experiences a personal emotional journey - and no two shows are ever the same.

KEY CAPABILITIES

Emotion Recognition - The AI reads audience energy and emotions in real time, adjusting tempo, visuals, and haptic intensity.

Generative Behavior - It creates dynamic audiovisual compositions, making each performance unique.

Biometric Feedback Loop - Sensors and wearables feed audience responses back to the system for instant adaptation.

Avatar Intelligence - Powers JOOLIA AI, enabling lifelike expression, movement, and emotional interaction on stage.
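To make the Biometric Feedback Loop capability concrete, here is a minimal sketch of one way sensor readings could be fed back into an output level. It assumes readings arrive as values between 0 and 1 and uses exponential smoothing so the output follows the audience without jumping on every noisy reading. The class name `FeedbackLoop` and the `alpha` parameter are hypothetical and do not describe the actual system.

```python
class FeedbackLoop:
    """Track an audience-response level with exponential smoothing."""

    def __init__(self, alpha: float = 0.3, initial: float = 0.0) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha    # higher alpha reacts faster to new readings
        self.level = initial  # current smoothed response level, 0..1

    def update(self, reading: float) -> float:
        """Blend a new sensor reading into the current level and return it."""
        reading = max(0.0, min(1.0, reading))  # clamp to the assumed 0..1 scale
        self.level += self.alpha * (reading - self.level)
        return self.level


loop = FeedbackLoop(alpha=0.5)
for reading in (1.0, 1.0, 0.0):
    level = loop.update(reading)
    # `level` could then drive haptic intensity, tempo, or visuals.
```

A larger `alpha` makes the show respond faster but also more erratically. The right trade-off would depend on the sensors and the venue, which the source does not specify.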